HSO AI Readiness Assessment

Start your AI journey with this proven approach to adoption

Gartner predicts that through 2026, organizations will abandon 60% of AI projects - not because AI doesn't work, but because they weren't clear on what they were actually trying to prove.

The difference between AI initiatives that scale and those that quietly get shelved often comes down to how the proof of concept was designed from the start: the right scope, the right data, the right question.

This guide breaks down what an AI proof of concept is, how it differs from a prototype, pilot, or MVP, and what it takes to run one that gives you a real answer.

What Is an AI Proof of Concept (PoC)?

An AI proof of concept is a bounded, time-limited experiment designed to answer one question: can this AI approach work for this specific problem, in this specific context, with this data?

It is not a product. It is not a demo to present at a board meeting. It is a structured test of a hypothesis - designed to produce a decision.

The output of a well-run POC isn't a working application; it's a clear answer. Should we invest further in this approach, or redirect resources before committing serious budget? A well-scoped POC delivers that answer in four to eight weeks, at minimum cost and with maximum clarity.

A POC is not:

Each of those things is valuable in the right context. None of them is a POC.

AI POC vs Prototype vs MVP vs Pilot: What's the Difference?

These four terms are used interchangeably in most organizations. They shouldn't be - each stage answers a different question and carries a different level of investment and risk.

Confusing a POC with an MVP is a common cause of early AI project failure. Stakeholders expect a production-ready product; the team delivers a technical feasibility test. The result is frustration, misaligned expectations, and a project that gets cancelled for the wrong reasons.

| Stage | Primary Question | Audience | Data Environment | Typical Duration | Success Metric |
|---|---|---|---|---|---|
| Proof of Concept (POC) | Can this be done? | Internal technical reviewers, business sponsor | Sample or synthetic data | 4-8 weeks | Feasibility confirmed; Go/No-Go decision |
| Prototype | What will it look like? | Design teams, select users | Mock or limited read-only data | 2-4 weeks | UX usability and stakeholder understanding |
| MVP | Will people use it? | Early adopters, specific internal team | Production data (limited scope) | 3-6 months | Usage, retention, or revenue generation |
| Pilot | Will it break at scale? | A segment of real users | Live production data, full integration | 3-6 months | System stability and full rollout readiness |

The transition between these stages is where most AI projects fall apart. A POC might prove that an LLM can summarize a contract with 90% accuracy - but the subsequent MVP phase might reveal that the cost of running that query at scale makes the solution economically unviable.
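The cost-at-scale risk described above can be estimated during the POC itself. The following is a minimal sketch, not a pricing tool: the class `QueryCostModel`, the function `monthly_margin`, and every token count and price are hypothetical placeholders that would need to be replaced with figures from your own provider and workload.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QueryCostModel:
    """Hypothetical per-query cost inputs; all values are placeholders."""

    input_tokens: int          # average prompt plus document tokens per query
    output_tokens: int         # average generated tokens per query
    usd_per_1k_input: float    # assumed price per 1,000 input tokens
    usd_per_1k_output: float   # assumed price per 1,000 output tokens

    def cost_per_query(self) -> float:
        """Model spend for one average query, in USD."""
        input_cost = (self.input_tokens / 1000) * self.usd_per_1k_input
        output_cost = (self.output_tokens / 1000) * self.usd_per_1k_output
        return input_cost + output_cost


def monthly_margin(model: QueryCostModel, queries_per_month: int,
                   value_per_query_usd: float) -> float:
    """Value delivered minus model spend for one month at the given volume."""
    return queries_per_month * (value_per_query_usd - model.cost_per_query())
```

A negative result from `monthly_margin` at the expected production volume is the kind of finding that is far cheaper to surface in a POC than in an MVP.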

What Makes AI POCs Different from Traditional Software POCs?

Traditional software POCs test whether something can be built. AI POCs test whether a probabilistic system can be trusted - and that's a harder question to answer.

In traditional software, a POC is largely a binary check: does System A communicate reliably with System B? The code either works or it doesn't. If it works in the test, it works in production.

AI systems don't work that way. They are probabilistic. The same prompt can produce different outputs on different days, with different phrasing, or against slightly different data. An AI model might perform well on your sample dataset and fail on real production data. It might be accurate 90% of the time - and wrong in ways that matter the other 10%.

This means AI POCs require a fundamentally different evaluation approach:

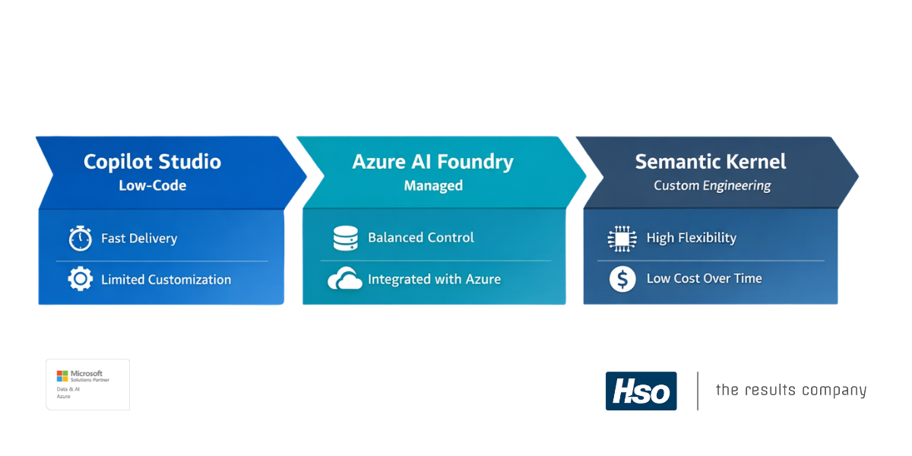

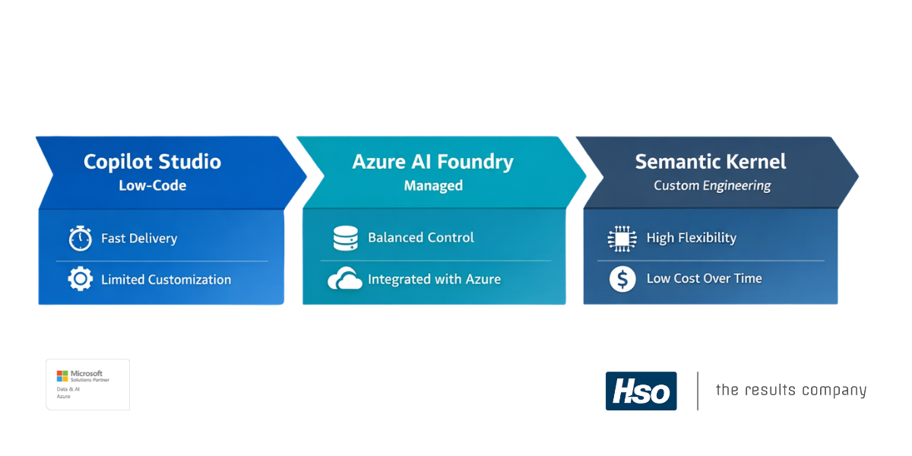
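Because results are probabilistic, a headline figure such as "90% accurate" means little without the sample size behind it. As a hedged illustration, the sketch below computes a Wilson score interval for an observed accuracy. The function name `wilson_interval` is our own, and the choice of the Wilson interval is an assumption for illustration, not a method prescribed by this guide.

```python
import math


def wilson_interval(correct: int, total: int, z: float = 1.96) -> tuple[float, float]:
    """Return the Wilson score interval for an observed accuracy.

    z = 1.96 corresponds to an approximate 95% confidence level.
    """
    if total <= 0:
        raise ValueError("total must be positive")
    if not 0 <= correct <= total:
        raise ValueError("correct must be between 0 and total")

    p = correct / total
    z2 = z * z
    denom = 1 + z2 / total
    centre = (p + z2 / (2 * total)) / denom
    half_width = z * math.sqrt(p * (1 - p) / total + z2 / (4 * total * total)) / denom
    return centre - half_width, centre + half_width
```

With 45 correct answers out of 50, the interval runs from roughly 79% to 96%; with 900 out of 1,000 it narrows to roughly 88% to 92%. The observed accuracy is the same, but the two results support very different levels of confidence.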

When developing AI agents or RAG applications, selecting the appropriate tooling is critical to balancing flexibility, cost, and operational complexity.

Why Run an AI POC? The Case for De-Risking AI

AI projects fail for four predictable reasons. A well-scoped proof of concept surfaces all four before you've committed serious resources.

An estimated 70-80% of AI initiatives never reach production. They stall in what the industry calls "POC Purgatory" - technically functional in a sandbox, but unable to clear the bar for business viability, data quality, or organizational readiness. The POC is the mechanism that prevents you from discovering that bar at the wrong point in the investment cycle.

A well-designed AI POC tests four risks in parallel:

Miss any one of these, and the project fails - at a stage where the cost of failure is far higher than it would have been during a four-week POC.

How to Select the Right Use Case for an AI POC

The most technically impressive use case is rarely the right starting point. The right use case sits at the intersection of high business value and high data readiness.

An AI POC that tests a complex, multi-system agentic workflow against data that doesn't yet exist will teach you nothing useful. A POC that tests a focused hypothesis against clean, accessible data will give you a defensible Go/No-Go in four weeks.
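The "intersection" idea can be made explicit with a simple shortlist. This is a minimal sketch under stated assumptions: the 1-5 scales, the `min_score` cut-off, and ranking by the product of the two scores are all illustrative choices, not an HSO scoring method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UseCase:
    name: str
    business_value: int   # 1 (low) to 5 (high), scored by the business sponsor
    data_readiness: int   # 1 (data missing) to 5 (clean and accessible)


def rank_use_cases(candidates: list[UseCase], min_score: int = 3) -> list[UseCase]:
    """Keep candidates that clear the bar on BOTH axes, best combined score first."""
    eligible = [
        c for c in candidates
        if c.business_value >= min_score and c.data_readiness >= min_score
    ]
    return sorted(
        eligible,
        key=lambda c: c.business_value * c.data_readiness,
        reverse=True,
    )
```

Filtering on both axes before ranking is deliberate: a candidate with very high business value but no usable data is dropped rather than allowed to win on value alone.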

Research from McKinsey indicates that approximately 75% of the economic value of generative AI concentrates in four business functions, with an estimated annual value between $2.6 trillion and $4.4 trillion.

Prioritizing these areas maximizes the likelihood that a successful AI POC leads to meaningful ROI.

POC Starting Points HSO Recommends

HSO regularly recommends these use cases as high-value, high-feasibility starting points for enterprise AI POCs. In fact, HSO has ready-made AI agents built to solve some of these exact problems. Each has a tested hypothesis, known data requirements, and clear success criteria.

Running an AI POC: An 8-Step Playbook

A structured POC process turns an experiment into a defensible business decision.

The most common reason POCs produce no useful output is that they were never structured as an experiment. They started with enthusiasm and ended with "it kind of works." The following eight steps produce a decision, not a demo.

Start with a measurable KPI, not a feature wishlist. "We want to use AI for customer support" is not a testable hypothesis. "We want to test whether AI triage can handle 40% of incoming tickets with >90% accuracy" is. One of them produces a Go/No-Go signal. The other produces a prototype.
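A hypothesis phrased this way can be checked mechanically against labelled test tickets. The sketch below is illustrative only: `TicketResult`, `triage_metrics`, and `hypothesis_holds` are hypothetical names, and it assumes a domain expert has already marked each AI-handled ticket as correct or incorrect.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class TicketResult:
    handled_by_ai: bool    # did the AI triage the ticket without escalation?
    ai_was_correct: bool   # expert judgement; only meaningful if handled_by_ai


def triage_metrics(results: Iterable[TicketResult]) -> tuple[float, float]:
    """Return (coverage, accuracy) for a batch of labelled tickets."""
    rows = list(results)
    if not rows:
        raise ValueError("no results to evaluate")
    handled = [r for r in rows if r.handled_by_ai]
    coverage = len(handled) / len(rows)
    # Accuracy is measured only on the tickets the AI actually handled.
    accuracy = sum(r.ai_was_correct for r in handled) / len(handled) if handled else 0.0
    return coverage, accuracy


def hypothesis_holds(results: Iterable[TicketResult],
                     min_coverage: float = 0.40,
                     min_accuracy: float = 0.90) -> bool:
    """True if the AI handles at least 40% of tickets with more than 90% accuracy."""
    coverage, accuracy = triage_metrics(results)
    return coverage >= min_coverage and accuracy > min_accuracy
```

The default thresholds mirror the example hypothesis in the text; a real POC would set its own.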

One use case. One dataset. One question. Every additional use case added at this stage doubles the complexity and halves the clarity of the output. If the first hypothesis proves positive, you'll have the foundation to run the next POC in half the time.

Data readiness is the most common POC killer. Before any build starts, audit your data: Can you access it? Is it clean enough to test against? Does it contain PII that needs to be handled before it enters the POC environment? Is there a sufficient volume to validate the hypothesis?

If the answer to any of these is unclear, the data assessment is your first deliverable - not the AI build.
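Parts of that audit can be automated before any build starts. The following is a rough sketch, not a complete data-quality or privacy tool: `audit_records`, its parameters, and the 5% missing-value tolerance are hypothetical, and the email regex is only a crude stand-in for proper PII detection.

```python
import re
from typing import Any, Iterable

# Crude illustrative pattern; real PII detection needs a dedicated tool.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def audit_records(records: Iterable[dict[str, Any]],
                  required_fields: list[str],
                  min_rows: int,
                  max_missing_rate: float = 0.05) -> list[str]:
    """Return a list of findings; an empty list means no issue was detected."""
    rows = list(records)
    findings: list[str] = []

    # Volume: is there enough data to validate the hypothesis?
    if len(rows) < min_rows:
        findings.append(f"volume: {len(rows)} rows, need at least {min_rows}")

    # Quality: how often is each required field empty or absent?
    for field in required_fields:
        missing = sum(1 for r in rows if r.get(field) in (None, ""))
        rate = missing / len(rows) if rows else 1.0
        if rate > max_missing_rate:
            findings.append(f"quality: '{field}' missing in {rate:.0%} of rows")

    # PII: flag rows containing an email-like string in any text value.
    pii_rows = sum(
        1 for r in rows
        if any(isinstance(v, str) and EMAIL_PATTERN.search(v) for v in r.values())
    )
    if pii_rows:
        findings.append(f"pii: {pii_rows} rows contain an email-like string")

    return findings
```

Any finding returned here would feed the data assessment deliverable rather than block it; the point is to learn about the gaps before the AI build begins.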

HSO recommends matching tooling to the fidelity the POC requires - not defaulting to the most complex option available:

Security is not a final step. Define roles and access controls before the first resource is provisioned.

HSO's guidance is clear: request only the roles you need, enforce least-privilege access, and keep production data out of the sandbox unless it has been properly governed and anonymized. This is not just good practice - it is how you avoid a compliance incident mid-POC.

Set your thresholds before you see any results. What accuracy level constitutes a pass? What is the maximum acceptable latency for the use case? What cost-per-query makes the solution economically viable? What user satisfaction score would confirm adoption?

Defining these after seeing results is not evaluation - it's post-hoc justification.

Examples:
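One hedged way to keep thresholds fixed before results arrive is to record them as an immutable object and compare measured results against it afterwards. The class and field names below are hypothetical, and any threshold values you pass in are your own; none are taken from this guide.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SuccessCriteria:
    """Thresholds agreed before the POC starts; frozen so they cannot be edited later."""

    min_accuracy: float
    max_p95_latency_s: float
    max_cost_per_query_usd: float
    min_satisfaction: float


@dataclass(frozen=True)
class PocResults:
    """Measured outcomes at the end of the POC."""

    accuracy: float
    p95_latency_s: float
    cost_per_query_usd: float
    satisfaction: float


def failed_criteria(criteria: SuccessCriteria, results: PocResults) -> list[str]:
    """Return the names of criteria that were not met; an empty list means Go."""
    checks = {
        "accuracy": results.accuracy >= criteria.min_accuracy,
        "latency": results.p95_latency_s <= criteria.max_p95_latency_s,
        "cost_per_query": results.cost_per_query_usd <= criteria.max_cost_per_query_usd,
        "satisfaction": results.satisfaction >= criteria.min_satisfaction,
    }
    return [name for name, passed in checks.items() if not passed]
```

Committing the criteria object to version control before the first test run gives reviewers a timestamped record that the bar was set in advance.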

Treat the POC environment as ephemeral and codified. Using Bicep and Azure Verified Modules means the environment is reproducible: if the POC succeeds, you can rebuild and harden it for production without starting from scratch. An environment built by hand cannot be audited, replicated, or trusted at scale.

An AI model that hits 92% accuracy on a test set but doesn't reduce processing time or operating costs has not proved its value. Always map technical metrics to business outcomes: accuracy to first-contact resolution rate, latency to user adoption, cost-per-query to cost-per-transaction saved.

The stakeholders who fund the next phase will ask about the business number - not the score.

When AI POCs Fail - and Why

Most AI POC failures are not random. They follow predictable patterns - and two high-profile examples make those patterns impossible to ignore.

The failures that attract attention are rarely pure technical disasters. They are the result of applying the wrong process to a problem that required rigor: insufficient scoping, no real evaluation criteria, and operational conditions that were never properly tested.

McDonald's deployed IBM Watson-powered AI order-taking to more than 100 US locations. The system was removed in 2024 after a string of failures - including orders being misheard and incorrectly processed - became widely documented.

The technical limitations were entirely foreseeable. The system struggled with accents, competing background noise, and complex or modified orders. None of these conditions were adequately tested before rollout. A voice AI that performs acceptably in a quiet environment is a fundamentally different problem from one operating in a fast-food drive-thru with ambient noise, dialect variation, and real menu complexity.

The lesson: Operational conditions are not optional POC scope. If the use case involves real-world noise, edge cases, or complex input variation, those must be in the test - not discovered after rollout.

Klarna deployed an AI assistant that handled 2.3 million conversations - two-thirds of their total customer service volume - within its first month. The system performed the equivalent work of 700 full-time agents while customer satisfaction scores held steady.

The reason it worked is straightforward: Klarna chose a single, well-scoped use case with clean data from years of customer interactions, defined a clear success metric (resolution rate), and phased the rollout. They did not attempt to automate all of customer service at once. They proved one hypothesis and scaled from there.

The lesson: Narrow scope, clean data, and a clear success metric produce a POC that answers the question. The Klarna approach is not sophisticated - it's disciplined.

HSO Perspective: Building AI POCs That Actually Scale

HSO's approach to AI POCs starts with the business problem, not the technology - and uses the Microsoft stack to build reproducible, governed environments that are ready to scale if the POC succeeds.

The most expensive mistake in AI is building something impressive that can't be repeated, audited, or hardened for production. HSO structures POC engagements as if the environment might become a production system - because the ones that succeed will.

Choosing the right tooling is a strategic decision, not a default. HSO recommends matching the tooling tier to the level of fidelity and control the specific POC requires.

| Tooling Path | Best For | Trade-offs |
|---|---|---|
| Microsoft Copilot Studio (Low-code) | Knowledge worker, customer support, fast demonstrations | Fastest to proof; higher long-term operational cost; limited customization depth |
| Azure AI Foundry / Microsoft Fabric (Managed) | RAG pipelines, structured data use cases, Azure-integrated environments | Balanced flexibility and control; retains IP; integrates with existing Microsoft stack |
| Semantic Kernel / Custom Engineering | Novel agentic workflows, production-representative builds | Highest initial complexity; lowest long-term cost; requires engineering resource |

An HSO AI POC engagement produces four specific outputs:

Already know Copilot Studio is the right fit?

Explore the potential of Microsoft Copilot Studio with HSO to revolutionize your business processes, elevate client services, and nurture long-lasting trust and loyalty.

Copilot Studio POC offering

Most well-scoped AI POCs can be completed in four to eight weeks. Simpler use cases using low-code tooling against clean, accessible data can be validated in four weeks.

More complex scenarios - those involving custom AI model pipelines, time-series data, or data remediation work - typically require eight to twelve weeks. If POC development is running longer than that, the scope has expanded or the data wasn't ready when the build started.

At minimum, you need a representative sample of the data the AI will act on, clear documentation of data ownership, and a basic quality assessment.

If you can't describe what "clean" looks like for your specific dataset, that assessment is your first task - not the AI build. Organizations that skip data readiness discovery typically spend the first half of their POC fixing data problems rather than testing their hypothesis.

A POC is a time-bounded experiment that produces a decision - not a product.

Its output is a clear Go/No-Go: proceed to MVP, iterate on the approach, or stop before committing further budget. A build engagement produces working software. A POC produces evidence.

Conflating the two leads to misaligned expectations on both sides and projects that are cancelled for the wrong reasons.

A well-scoped AI POC should cost a fraction of a full AI implementation - because it is designed to answer a question before you commit to answering it at scale.

Actual costs vary by use case complexity, tooling tier, and data readiness, but the principle holds: spend enough to get a reliable answer, not enough to build the production system. If a POC is approaching the cost of an MVP, the scope has broken down.

For most enterprise POCs, closed models - specifically Azure OpenAI and GPT models - offer the fastest path to a working result with the governance controls large organizations require.

They need minimal infrastructure, provide built-in safety filters, and are deployable within the Azure environment with data residency options. Open-source models are worth evaluating when data privacy requirements prevent using cloud APIs, or when fine-tuning on proprietary data is central to the use case and long-term cost management is a priority.

Success is defined before the POC starts - not after the results come in.

Typical criteria include: accuracy above a defined threshold (established through domain expert review), latency within acceptable limits for the use case, cost-per-query within the economic model, and at least one business KPI moving in the right direction.

If success criteria are only defined after seeing results, the POC has been run as a demo - not as an experiment.
